A Comprehensive Survey on Trustworthy Graph Neural Networks: Privacy, Robustness, Fairness, and Explainability

Published:

Summary: We present a taxonomy of trustworthy GNNs covering privacy, robustness, fairness, and explainability. For each aspect, we group existing works into categories, give a general framework for each category, describe representative and state-of-the-art methods in detail, and discuss future directions.

By Enyan Dai, Tianxiang Zhao, and Suhang Wang

Graph-structured data are pervasive in the real world, for example in social networks, traffic networks, knowledge graphs, and protein-protein interaction networks. Graph Neural Networks (GNNs) extend neural networks to graph-structured data by aggregating information from each node's neighborhood to update its representation. This message-passing mechanism has led to great success in modeling graphs. For instance, Pinterest has applied GNNs in its image recommendation system. There are also efforts to deploy GNNs for credit risk estimation. However, as with other deep learning models, GNNs raise several serious trustworthiness concerns:

- Privacy and robustness are not guaranteed in GNNs. GNNs are vulnerable to privacy attacks that steal private information and to adversarial attacks that fool the model. For example, attackers can use the outputs or node embeddings of a GNN to infer node attributes and friendship links in a social network. They can also commit fraud by injecting malicious links or nodes to fool a GNN deployed for credit risk estimation, causing significant financial loss to individuals, institutions, and society.

- GNN models themselves have problems with fairness and explainability. More specifically, GNNs have been shown to magnify the bias in the data, resulting in discrimination with respect to gender, race, and other protected sensitive attributes. Because the models are highly nonlinear, their predictions are difficult to understand, which largely limits the applications of GNNs.

These weaknesses significantly hinder the adoption of GNNs in real-world applications, especially in high-stakes scenarios such as finance and healthcare. Therefore, how to build trustworthy GNN models has become a focal topic. In our recent survey paper [6], we give a comprehensive review of existing trustworthy GNNs from the computational perspectives of privacy, robustness, fairness, and explainability, and discuss promising future directions. In this blog post, we briefly introduce the structure and covered topics of each aspect of the survey.

Privacy

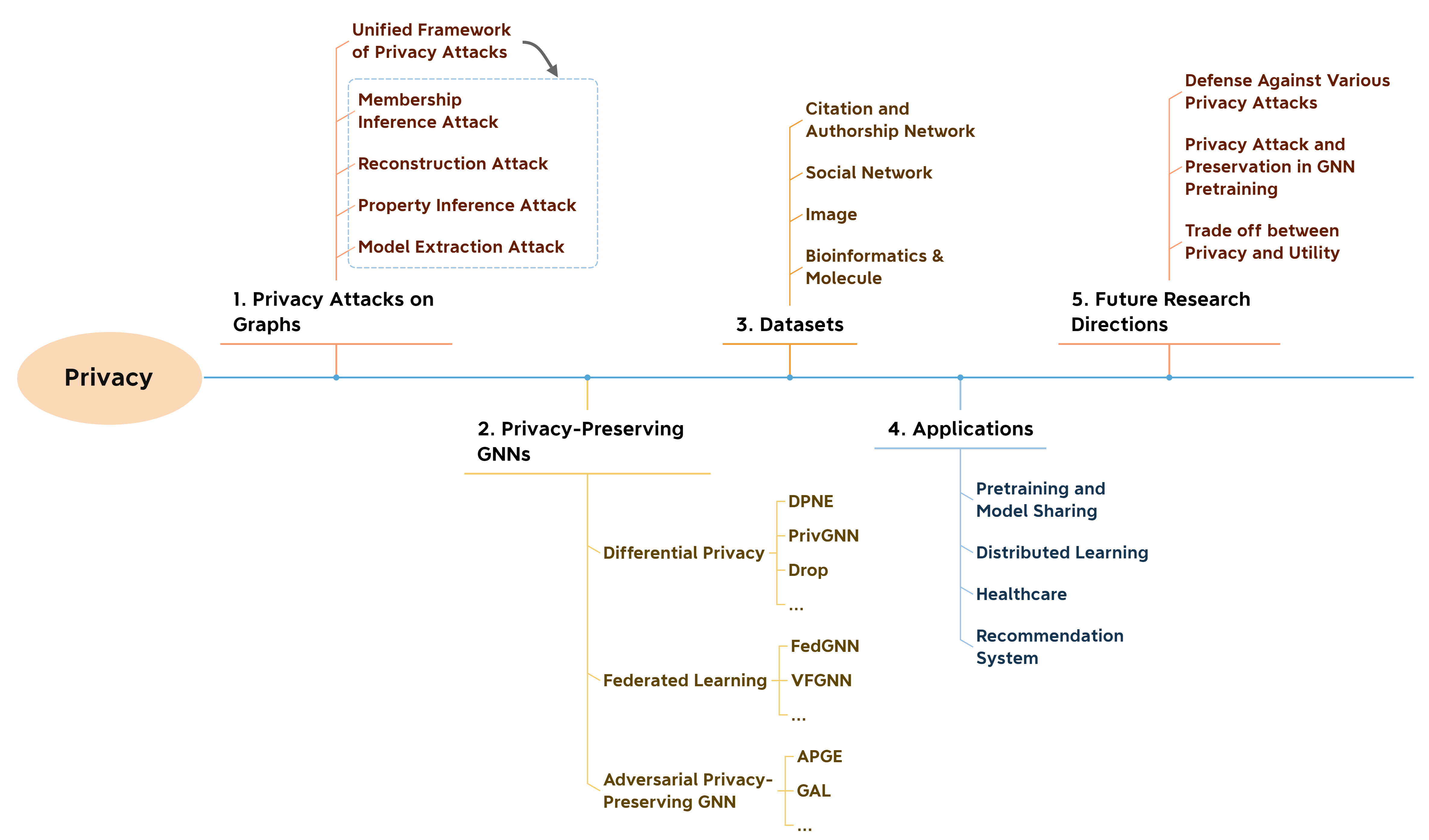

Figure 1. The structure of the privacy section (Sec.3) in the survey.

As mentioned above, privacy attacks can leak users' private information through a released model or the services built on it. However, existing surveys are overwhelmingly dedicated to privacy issues in models for independent and identically distributed (i.i.d.) data such as images and text, and the methods they introduce cannot handle the graph structure and message-passing mechanism of GNNs. Thus, we give an overview of privacy attacks on GNNs and of privacy-preserving GNNs. The covered topics are shown in Figure 1. We first formulate a unified framework for existing privacy attacks on graphs. Then we introduce four types of privacy attacks, i.e., membership inference attacks, reconstruction attacks, property inference attacks, and model extraction attacks, with details of the corresponding methods. We split the privacy-preserving methods into three categories, i.e., differential privacy, federated learning, and adversarial privacy-preserving. The figure lists some of the methods we discuss; for more methods and details, please refer to Section 3 of the survey paper. We also cover related datasets in various domains and the applications of privacy-preserving GNNs. We find that defenses against many privacy attacks on graphs, such as property inference attacks and model extraction attacks, are less studied. Therefore, defending against the various privacy attacks is one of the promising research directions. Other directions are also discussed in the survey.
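To make the differential privacy category more concrete, here is a minimal sketch of randomized response applied to the edges of a graph, a standard building block for edge-level local differential privacy. It is an illustration under stated assumptions, not a method taken from the survey: the function name `randomized_response_edges`, the toy graph, and the choice to perturb every node pair independently are all hypothetical.

```python
import numpy as np

def randomized_response_edges(adj, epsilon, seed=0):
    """Perturb an undirected 0/1 adjacency matrix with randomized response.

    Each node pair keeps its true edge bit with probability
    e^eps / (1 + e^eps) and reports the flipped bit otherwise.
    """
    rng = np.random.default_rng(seed)
    n = adj.shape[0]
    keep_prob = np.exp(epsilon) / (1.0 + np.exp(epsilon))
    # Work on the strict upper triangle so each node pair is perturbed once.
    iu = np.triu_indices(n, k=1)
    bits = adj[iu].astype(int)
    flip = rng.random(bits.shape[0]) >= keep_prob
    noisy_bits = np.where(flip, 1 - bits, bits)
    # Rebuild a symmetric matrix with an empty diagonal.
    noisy = np.zeros((n, n), dtype=int)
    noisy[iu] = noisy_bits
    return noisy + noisy.T

# Hypothetical toy graph: a path 0-1-2-3.
toy_adj = np.array([[0, 1, 0, 0],
                    [1, 0, 1, 0],
                    [0, 1, 0, 1],
                    [0, 0, 1, 0]])
noisy_adj = randomized_response_edges(toy_adj, epsilon=1.0)
```

A smaller `epsilon` flips more edge bits, which gives stronger privacy but a noisier graph for the GNN to learn from. With a very large `epsilon` the graph is returned essentially unchanged.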

Robustness

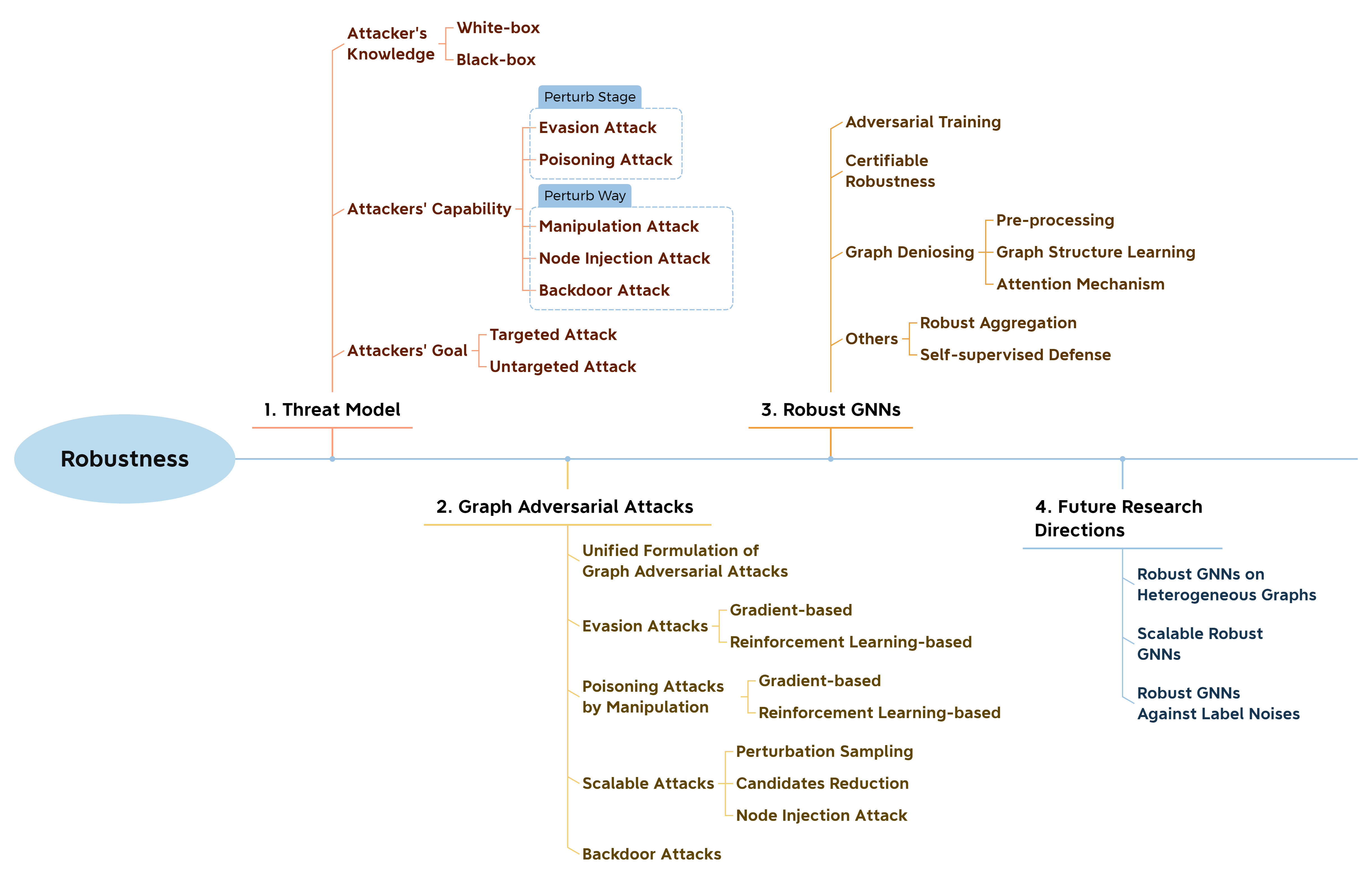

Figure 2. The organization of the robustness section (Sec.4) in the survey.

Robustness is another important aspect of trustworthiness. Because GNNs propagate information along the graph structure through message passing, they can be negatively affected by adversarial perturbations of both the graph structure and the node attributes. For example, fraudsters can create several transactions with deliberately chosen high-credit users to escape GNN-based fraud detectors. This implies the necessity of investigating robust GNNs in safety-critical domains such as healthcare and financial systems.
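The fraud example can be reproduced on a toy graph. The sketch below uses one round of mean aggregation as a stand-in for a message-passing layer; the graph, the scalar "credit score" features, and the helper `mean_aggregate` are assumptions made for illustration, not the attack or model of any specific paper.

```python
import numpy as np

def mean_aggregate(adj, features):
    """One round of message passing: average a node's own and its neighbors' features."""
    a_hat = adj + np.eye(adj.shape[0])        # add self-loops
    deg = a_hat.sum(axis=1, keepdims=True)    # neighborhood size including self
    return (a_hat @ features) / deg

# Nodes 0-2 are high-credit users; node 3 is a fraudster with no links yet.
adj = np.array([[0, 1, 1, 0],
                [1, 0, 0, 0],
                [1, 0, 0, 0],
                [0, 0, 0, 0]], dtype=float)
features = np.array([[0.9], [0.8], [0.7], [0.0]])  # hypothetical credit scores

clean = mean_aggregate(adj, features)

# Structure perturbation: the fraudster adds edges to high-credit users 0 and 1.
perturbed_adj = adj.copy()
for target in (0, 1):
    perturbed_adj[3, target] = perturbed_adj[target, 3] = 1.0
perturbed = mean_aggregate(perturbed_adj, features)

# The fraudster's aggregated score moves from 0.0 to about 0.57.
print(clean[3, 0], perturbed[3, 0])
```

Two inserted edges are enough to pull the fraudster's representation toward that of its high-credit neighbors, while node 2, which the attacker did not touch, is unaffected after one round of aggregation.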

There are already several surveys on robustness for graph-structured data. Therefore, our survey focuses more on emerging directions such as scalable attacks, graph backdoor attacks, and recent defense methods. More specifically, perturbation sampling and perturbation candidate reduction are two directions that have been explored to improve the scalability of existing attack methods. In addition, node injection attacks, in which the time complexity of injecting one node is linear in the graph size, are another promising direction. As for graph backdoor attacks, we present the few existing works in detail. We categorize existing robust GNNs against graph adversarial attacks as shown in Figure 2. Defense with self-supervision is a new direction that has rarely been discussed before, so we present methods in this direction, such as SimP-GCN [1], in detail. For the other categories, we add the most recent approaches such as RS-GNN [2], which simultaneously deals with label sparsity and adversarial perturbations. Some future research directions for robust GNNs are also discussed. More details can be found in Section 4 of the survey.

Fairness

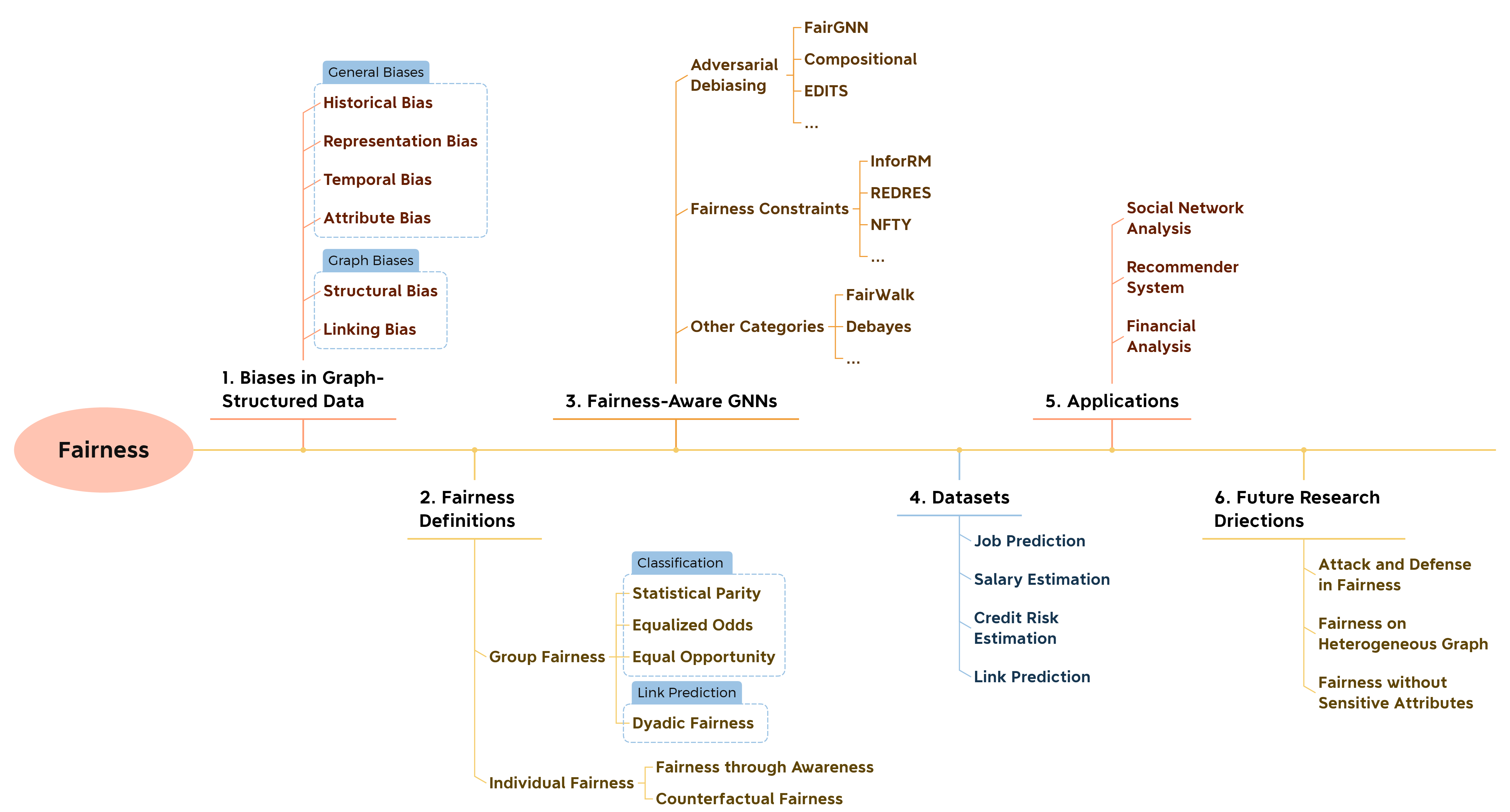

Figure 3. The organization of the fairness section (Sec.5) in our survey.

Our recent research [3] has shown that the message-passing mechanism of GNNs can magnify biases compared with an MLP, which confirms the need for fair algorithms designed specifically for graph-structured data. Recently, many works have emerged that develop fairness-aware GNNs to achieve various types of fairness on different tasks. Hence, we comprehensively review the fairness of graph neural networks from the aspects shown in Figure 3.

First, we discuss the potential biases in graph-structured data, which include biases that exist in all formats of data as well as unique biases caused by the graph topology. For fairness research, one of the most important questions is how to define fairness mathematically. We list the fairness definitions widely used in the literature on fair GNNs. Most of them apply to both i.i.d. data and graph-structured data, except dyadic fairness, which is designed for link prediction.
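As an illustration of what a mathematical fairness definition looks like, the sketch below computes two group fairness measures that are commonly used in the fair-GNN literature for node classification: the statistical parity difference and the equal opportunity difference. This post does not name the definitions listed in the survey, so treat these two as examples. The function names and the toy predictions are assumptions, and both functions assume binary labels and a binary sensitive attribute.

```python
import numpy as np

def statistical_parity_difference(y_pred, sensitive):
    """|P(y_hat = 1 | s = 0) - P(y_hat = 1 | s = 1)| for binary predictions."""
    y_pred, sensitive = np.asarray(y_pred), np.asarray(sensitive)
    return abs(y_pred[sensitive == 0].mean() - y_pred[sensitive == 1].mean())

def equal_opportunity_difference(y_true, y_pred, sensitive):
    """|P(y_hat = 1 | y = 1, s = 0) - P(y_hat = 1 | y = 1, s = 1)|."""
    y_true, y_pred, sensitive = map(np.asarray, (y_true, y_pred, sensitive))
    positive = y_true == 1
    rate_0 = y_pred[positive & (sensitive == 0)].mean()
    rate_1 = y_pred[positive & (sensitive == 1)].mean()
    return abs(rate_0 - rate_1)

# Hypothetical node-classification output for eight nodes in two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

sp = statistical_parity_difference(y_pred, group)         # 0.75 - 0.25 = 0.5
eo = equal_opportunity_difference(y_true, y_pred, group)  # 1.0 - 0.5 = 0.5
```

Both quantities are zero for a perfectly fair classifier under the respective definition. Neither uses the graph, which is why such definitions carry over from i.i.d. data to node-level tasks.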

We categorize fair GNNs into adversarial debiasing, fairness constraints, and other categories. Some representative methods are shown in Figure 3. For both adversarial debiasing and fairness constraints, we formulate a unified framework and objective function. We then present the detailed implementation and the targeted fairness notion of each existing method. Datasets for training and evaluating fair GNNs need to contain users' sensitive attributes, which can be difficult to obtain. Hence, we list the graph datasets used for fairness-aware GNNs in various application domains.
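The fairness-constraint idea can be sketched as a task loss plus a weighted fairness penalty. The version below is a generic illustration, not the unified objective formulated in the survey: the penalty (the gap in mean predicted probability between two groups), the weight `lam`, and the function name are all assumptions.

```python
import numpy as np

def fairness_regularized_loss(probs, labels, sensitive, lam=1.0):
    """Binary cross-entropy plus lam times the gap in mean predicted probability."""
    probs = np.clip(np.asarray(probs, dtype=float), 1e-7, 1 - 1e-7)
    labels = np.asarray(labels, dtype=float)
    sensitive = np.asarray(sensitive)
    # Task term: standard binary cross-entropy over all nodes.
    bce = -np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))
    # Fairness term: a soft surrogate for the statistical parity difference.
    gap = abs(probs[sensitive == 0].mean() - probs[sensitive == 1].mean())
    return bce + lam * gap, bce, gap

# Hypothetical predicted probabilities for four nodes in two groups.
total, task_term, fair_term = fairness_regularized_loss(
    probs=[0.9, 0.8, 0.2, 0.1],
    labels=[1, 1, 0, 0],
    sensitive=[0, 0, 1, 1],
    lam=2.0,
)
```

Increasing `lam` trades prediction accuracy for a smaller gap between groups. Adversarial debiasing replaces the explicit penalty with an adversary that tries to predict the sensitive attribute from the learned representations.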

Explainability

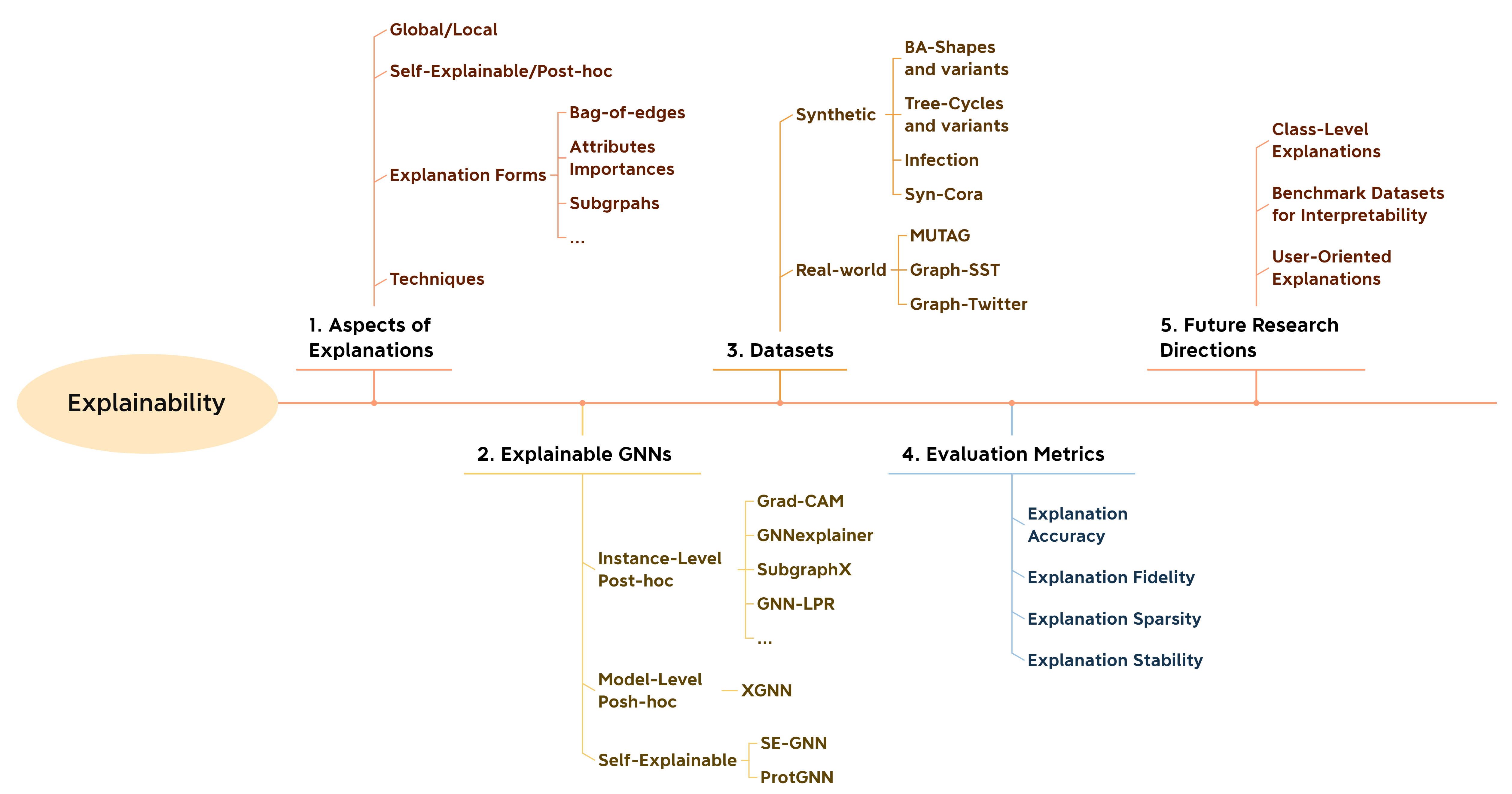

Figure 4. The organization of the explainability section (Sec.6) in our survey.

Because graph structures are discrete and complex, and because GNNs combine high non-linearity with message passing, GNN models generally lack interpretability. It is therefore very important to develop explainable GNNs. Explanations can enhance practitioners' trust in GNNs. In addition, explanations can expose the knowledge a model has captured, helping us check for possible biases and adversarial attacks. Many efforts have therefore been devoted to explainable GNNs, and we give a comprehensive review of them. The overall organization of the explainability section is shown in Figure 4. We first discuss the categorization of explanations in GNNs. Then we categorize explainable GNNs into instance-level post-hoc, model-level post-hoc, and self-explainable methods. It is worth mentioning that self-explainable GNNs are a new direction that has not been reviewed by previous surveys. We give adequate details of self-explainable GNN methods such as SE-GNN [4] and ProtGNN [5]. For the other groups of methods, we give a further lower-level categorization based on the techniques they implement. Since ground-truth explanations are very hard to obtain for real-world graphs, datasets and evaluation metrics remain big challenges in verifying the correctness of learned explanations. Benchmark datasets for explainability are thus a promising direction for future research on the explainability of GNNs. More details can be found in our survey paper.
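To give a feel for instance-level post-hoc explanation, the sketch below scores each edge around a target node by how much the prediction changes when that edge is removed. It is a simple occlusion-style illustration under stated assumptions, not one of the methods reviewed in the survey: the one-layer mean-aggregation model `predict_node`, its weights, and the toy graph are hypothetical.

```python
import numpy as np

def predict_node(adj, features, weights, node):
    """Hypothetical one-layer model: mean-aggregate self and neighbors, then a linear score."""
    a_hat = adj + np.eye(adj.shape[0])
    hidden = (a_hat[node] @ features) / a_hat[node].sum()
    return float(hidden @ weights)

def edge_occlusion_importance(adj, features, weights, node):
    """Score each edge incident to `node` by the prediction change when it is removed."""
    base = predict_node(adj, features, weights, node)
    scores = {}
    for nbr in np.flatnonzero(adj[node]):
        masked = adj.copy()
        masked[node, nbr] = masked[nbr, node] = 0.0
        changed = predict_node(masked, features, weights, node)
        scores[(node, int(nbr))] = abs(base - changed)
    return scores

# Toy graph: node 0 is linked to nodes 1 and 2.
adj = np.array([[0, 1, 1],
                [1, 0, 0],
                [1, 0, 0]], dtype=float)
features = np.array([[1.0], [1.0], [4.0]])
weights = np.array([1.0])

scores = edge_occlusion_importance(adj, features, weights, node=0)
most_important = max(scores, key=scores.get)  # the edge to node 2 matters most
```

Here the explanation for node 0 is the edge whose removal changes the output the most. Without ground-truth explanations, the quality of such scores has to be judged with proxy metrics, which is the evaluation difficulty noted above.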

References

[1] Jin, Wei, Tyler Derr, Yiqi Wang, Yao Ma, Zitao Liu, and Jiliang Tang. “Node Similarity Preserving Graph Convolutional Networks.” Proceedings of the 14th ACM International Conference on Web Search and Data Mining, pp. 148-156. 2021.

[2] Dai, Enyan, Wei Jin, Hui Liu, and Suhang Wang. “Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels.” Proceedings of the 15th ACM International Conference on Web Search and Data Mining. 2022.

[3] Dai, Enyan, and Suhang Wang. “Say no to the discrimination: Learning fair graph neural networks with limited sensitive attribute information.” Proceedings of the 14th ACM International Conference on Web Search and Data Mining. 2021.

[4] Dai, Enyan, and Suhang Wang. “Towards Self-Explainable Graph Neural Network.” Proceedings of the 30th ACM International Conference on Information & Knowledge Management. 2021.

[5] Zhang, Zaixi, Qi Liu, Hao Wang, Chengqiang Lu, and Cheekong Lee. “ProtGNN: Towards Self-Explaining Graph Neural Networks.” arXiv preprint arXiv:2112.00911 (2021).

[6] Dai, Enyan, Tianxiang Zhao, Huaisheng Zhu, Junjie Xu, Zhimeng Guo, Hui Liu, Jiliang Tang, and Suhang Wang. “A Comprehensive Survey on Trustworthy Graph Neural Networks: Privacy, Robustness, Fairness, and Explainability.” arXiv preprint arXiv:2204.08570 (2022).